5 Introduction to Instance-level Exploration

Instance-level exploration methods help us understand how a model yields a prediction for a single observation. We may consider the following situations as examples:

- We may want to evaluate the effects of explanatory variables on the model’s predictions. For instance, we may be interested in predicting the risk of heart attack based on a person’s age, sex, and smoking habits. A model may be used to construct a score (for instance, a linear combination of the explanatory variables representing age, sex, and smoking habits) that could be used for the purposes of prediction. For a particular patient, we may want to learn how much the different variables contribute to the score.

- We may want to understand how the model’s predictions would change if the values of some of the explanatory variables changed. For instance, what would be the predicted risk of heart attack if the patient cut the number of cigarettes smoked per day by half?

- We may discover that the model is providing incorrect predictions, and we may want to find the reason. For instance, a patient with a very low risk-score experienced a heart attack. What has driven the wrong prediction?
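
The first two situations can be made concrete with a minimal sketch of a linear risk score. The coefficients, variable names, and patient values below are hypothetical and chosen only for illustration; they are not taken from any clinical model. The sketch shows how each variable's term contributes to the score for one patient and how the predicted risk responds when the number of cigarettes is halved.

```python
import math

# Hypothetical coefficients, for illustration only (not clinically derived).
INTERCEPT = -6.0
COEFS = {"age": 0.06, "male": 0.5, "cigarettes_per_day": 0.04}


def contributions(patient):
    """Per-variable terms of the linear score for a single patient."""
    return {name: COEFS[name] * patient[name] for name in COEFS}


def score(patient):
    """Linear combination of the explanatory variables."""
    return INTERCEPT + sum(contributions(patient).values())


def risk(patient):
    """Map the score to a probability with the logistic function."""
    return 1.0 / (1.0 + math.exp(-score(patient)))


# A single (hypothetical) patient and a "what-if" copy with half the cigarettes.
patient = {"age": 60, "male": 1, "cigarettes_per_day": 20}
what_if = dict(patient, cigarettes_per_day=patient["cigarettes_per_day"] / 2)

print("contributions to the score:", contributions(patient))
print("predicted risk:", round(risk(patient), 3))
print("predicted risk with half the cigarettes:", round(risk(what_if), 3))
```

For a linear score, the contributions are simply the individual terms of the sum. For black-box models, such a decomposition is not directly available, which is what motivates the methods described in this part of the book.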

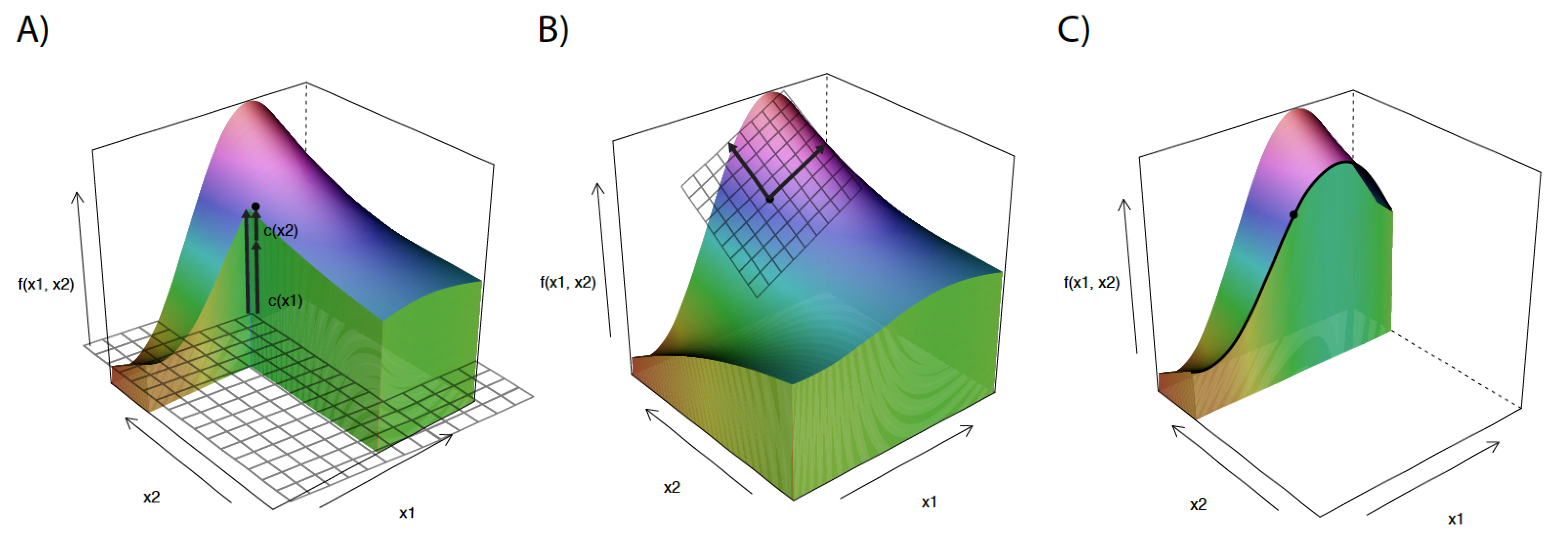

Figure 5.1: Illustration of different approaches to instance-level exploration. The plots present the response (prediction) surface for a (black-box) model that is a function of two explanatory variables. We are interested in understanding the model response (prediction) at a single point (observation). Panel A illustrates the concept of variable attributions. The additive effect of each variable shows how the prediction for the particular observation differs from the average. Panel B illustrates the concept of explanations through local models. A simpler glass-box model is used to approximate the black-box model around the point (observation) of interest. It describes the local behaviour of the model. Panel C presents a “What-if” analysis with a ceteris-paribus profile. The profile shows the model response (prediction) as a function of a single explanatory variable while keeping the values of all other explanatory variables fixed.

In this part of the book, we describe the most popular approaches to instance-level exploration. They can be divided into three classes:

- One approach is to analyze how the model’s prediction for a particular instance differs from the average prediction and how the difference can be distributed among explanatory variables. This method is often called the “variable attributions” approach. An example is provided in panel A of Figure 5.1. Chapters 6-8 present various methods for implementing this approach.

- Another approach treats the model as a function and investigates the local behaviour of this function around the point (observation) of interest \(\underline{x}_*\). In particular, we analyze the curvature of the model response (prediction) surface around \(\underline{x}_*\). In the case of a black-box model, we may approximate it with a simpler glass-box model around \(\underline{x}_*\). An example is provided in panel B of Figure 5.1. Chapter 9 presents the Local Interpretable Model-agnostic Explanations (LIME) method that exploits the concept of a “local model”.

- Yet another approach is to investigate how the model’s prediction changes if the value of a single explanatory variable changes. The approach is useful in the so-called “What-if” analyses. In particular, we can construct plots presenting the change in model-based predictions induced by a change of a single explanatory variable. Such plots are usually called ceteris-paribus (CP) profiles. An example is provided in panel C of Figure 5.1. Chapters 10-12 introduce the CP profiles and methods based on them.
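
The three classes can be illustrated on a toy example. The sketch below is only an illustration under stated assumptions: `model` is a hypothetical stand-in for a black-box model with two explanatory variables, the data are simulated, the attribution step uses one simple, order-dependent way of distributing the difference from the average prediction, and the local model is a plain first-order (linear) approximation rather than LIME.

```python
import random


def model(x1, x2):
    """Hypothetical stand-in for a black-box model of two variables."""
    return x1 * x2 + 0.5 * x1 ** 2


# Simulated data set and the observation of interest (both hypothetical).
random.seed(0)
data = [(random.uniform(0, 1), random.uniform(0, 1)) for _ in range(500)]
x_star = (0.8, 0.3)
pred_star = model(*x_star)

# 1. Variable attributions: distribute (prediction - average prediction)
#    among variables by fixing x1 first and x2 second. The result depends
#    on this ordering; other orderings may give different attributions.
mean_pred = sum(model(a, b) for a, b in data) / len(data)
mean_x1_fixed = sum(model(x_star[0], b) for _, b in data) / len(data)
attributions = {
    "x1": mean_x1_fixed - mean_pred,
    "x2": pred_star - mean_x1_fixed,
}

# 2. Local model: a linear approximation around x_star, with slopes
#    estimated by central finite differences.
h = 1e-5
slope_x1 = (model(x_star[0] + h, x_star[1]) - model(x_star[0] - h, x_star[1])) / (2 * h)
slope_x2 = (model(x_star[0], x_star[1] + h) - model(x_star[0], x_star[1] - h)) / (2 * h)


def local_model(x1, x2):
    """Glass-box (linear) approximation valid near x_star."""
    return pred_star + slope_x1 * (x1 - x_star[0]) + slope_x2 * (x2 - x_star[1])


# 3. Ceteris-paribus profile: vary x1 over a grid, keep x2 fixed at its
#    value for the observation of interest.
grid = [i / 10 for i in range(11)]
cp_profile = [(g, model(g, x_star[1])) for g in grid]

print("attributions:", attributions)
print("local slopes:", slope_x1, slope_x2)
print("CP profile for x1:", cp_profile)
```

By construction, the two attributions add up to the difference between the prediction for the observation of interest and the average prediction, the local model agrees with the black-box model at \(\underline{x}_*\), and the CP profile passes through the prediction for \(\underline{x}_*\).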

Each method has its own merits and limitations, which are briefly discussed in the corresponding chapters. Chapter 13 offers a comparison of the methods.